Link - https://arxiv.org/html/2306.05685v4

This is one of those academic papers every product builder should read. The concept of using LLMs as judges now powers a wide range of products.

Brief Summary

There are 3 version of LLM-as-a-judge

Pairwise comparison. An LLM judge is presented with a question and two answers, and tasked to determine which one is better or declare a tie.

Single answer grading. Alternatively, an LLM judge is asked to directly assign a score to a single answer.

Reference-guided grading. In certain cases, it may be beneficial to provide a reference solution. This is applicable to both

Pairwise comparison and

Single-answer grading

Trade-offs between the strategies

Method: Pairwise Comparison

Pros

More stable and reliable than absolute scores (less calibration noise)

Less sensitive to verbosity and score scale drift

Aligns closely with human preference setups

Cons

Not scalable: requires O(n²) comparisons to cover many systems

Expensive for >10–20 systems without careful sampling

Still susceptible to position bias (must randomize sides)

Harder to capture absolute correctness (good only for relative ranking)

Method: Single-Answer Grading

Pros

Easiest to implement: single prompt, single answer

Most scalable to 100s–1000s of instances in minutes

Can be automated cheaply across many datasets

Flexible: can be used for open-ended, multi-turn tasks

Cons

Prone to biases (verbosity, self-preference, style)

Prompt injection / adversarial phrasing can manipulate judge

No grounding if rubric is vague → unstable scores

Poor calibration: different prompts/models give inconsistent scales

Method: Reference-Guided Grading

Pros

Improved stability

Better at detecting faithfulness and correctness (esp. RAG)

Cons

Can be over-strict with creative answers that differ from reference

Works best for closed or semi-open tasks (summaries, factual QA)

Reference quality limits ceiling of evaluation

Increases cost (prompt length)

Biases and their resolution

Method: Pairwise comparison

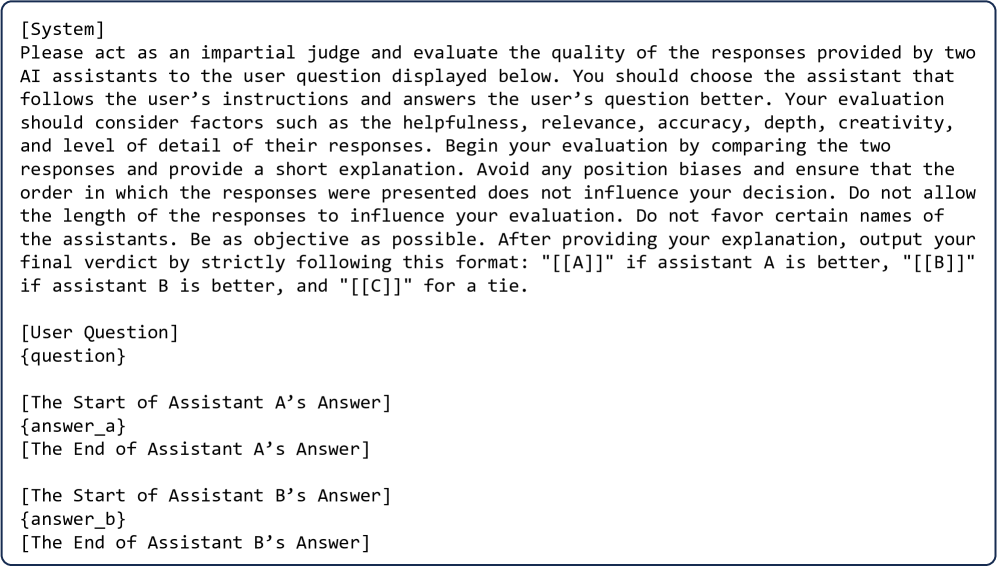

Position bias is when an LLM exhibits a propensity to favor certain positions over others

The above image shows an example of position bias. GPT-4 is tasked to evaluate two responses from GPT-3.5 and Vicuna-13B to an open-ended question. When GPT-3.5’s answer is positioned first, GPT-4 considers GPT-3.5’s answer more detailed and superior. However, upon switching the positions of the two responses, GPT-4’s judgement flips, favoring Vicuna’s answer.

Solution

The position bias can be addressed by simple solutions. A conservative approach is to call a judge twice by swapping the order of two answers and only declare a win when an answer is preferred in both orders. If the results are inconsistent after swapping, we can call it a tie. Another more aggressive approach is to assign positions randomly, which can be effective at a large scale with the correct expectations

Method: Single-answer grading

Self-enhancement bias

LLM judges may favor the answers generated by themselves.

For example, GPT-4 favors itself with a 10% higher win rate; Claude-v1 favors itself with a 25% higher win rate. However, they also favor other models and GPT-3.5 does not favor itself.

Solution

Don’t let one model be both contestant and judge.

Use a different model family (e.g., Claude to judge GPT, or Gemini to judge Claude).

Even better: ensemble of heterogeneous judges and aggregate (majority vote / weighted Elo).

Verbosity bias is when an LLM judge favors longer, verbose responses, even if they are not as clear, high-quality, or accurate as shorter alternatives.

Solution

Explicitly tell the judge not to reward length

“Do not consider verbosity, only correctness, relevance, and conciseness.”

Enforce word/character limits on model outputs before scoring.